Data is central to every organization, and it has to be stored and managed efficiently. Data quality determines the quality of the resulting analysis: poor data can lead to poor conclusions, which can be a major setback for an organization. It also has a strong influence on the effectiveness of marketing campaigns. One of the major causes of poor data quality is human error. Accurate, business-ready data is essential for any business, yet managing data quality can seem like an overwhelming task. A reliable data entry service can help businesses record data accurately and maintain its quality.

Many tools available today help analyze, manage and scrub data from numerous sources, including databases, e-mail, social media, logs, and the Internet of Things. These tools remove errors, typos, redundancies and inconsistencies. With proper data quality management, businesses can perform data mapping, data consolidation associated with extract, transform and load (ETL) tools, data validation and reconciliation, sample testing, data analytics and all forms of Big Data handling.
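
As a rough illustration of what scrubbing involves, the sketch below normalizes whitespace and letter case in a few made-up contact records and then drops the resulting duplicates. The field names (`name`, `email`) and the sample values are hypothetical, and commercial tools do far more than this; it only shows the basic idea in Python.

```python
def normalize(record):
    """Trim and collapse whitespace in every field; lowercase the e-mail address."""
    cleaned = {key: " ".join(str(value).split()) for key, value in record.items()}
    cleaned["email"] = cleaned["email"].lower()
    return cleaned


def scrub(records):
    """Normalize every record and keep only the first copy of each duplicate."""
    seen = set()
    unique = []
    for record in records:
        cleaned = normalize(record)
        # Two records count as duplicates when name (case-insensitive) and e-mail match.
        key = (cleaned["name"].lower(), cleaned["email"])
        if key not in seen:
            seen.add(key)
            unique.append(cleaned)
    return unique


# Hypothetical sample data with stray spaces, mixed case and a repeated entry.
raw_records = [
    {"name": "  Ann  Lee ", "email": "Ann.Lee@Example.com"},
    {"name": "Ann Lee", "email": "ann.lee@example.com "},
    {"name": "Bob Ray", "email": "bob.ray@example.com"},
]

clean_records = scrub(raw_records)
for row in clean_records:
    print(row)
```

Normalizing before comparing is the key design choice here: without it, the first two records would look different and the duplicate would slip through.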

Because this data flows across the organization and its networks, it is important to choose the right tools from the many that are available. Here are some of the leading data quality tools.

- Cloudingo: This is a data cleansing tool designed for Salesforce. It can spot duplicates, data inconsistencies and human errors, and it offers data imports, high flexibility and control, and improved security protections.

(Image source: https://cloudingo.com/)

- It provides strong security controls, including permission-based logins and simultaneous logins, and it supports separate user accounts and tools for auditing who has made changes.

- Cloudingo uses a drag-and-drop interface that eliminates the need for coding and spreadsheets. It has templates with filters that allow customization, along with built-in analytics. It supports both REST and SOAP.

- The tool covers all major requirements, such as merging duplicate records, converting leads to contacts, deduplicating import files, deleting stale records, automating tasks on a schedule, and providing detailed reports on change tracking.

- IBM InfoSphere QualityStage: This data quality application is available on premises or in the cloud. It provides a comprehensive approach to data cleansing and data management, and it focuses on establishing consistent and accurate views of customers, vendors, locations and products. It is designed for big data, business intelligence, and data warehousing.

- It has various features designed to produce quality data, and it helps users understand the content, quality and structure of tables, files and other formats.

- The platform offers more than 200 built-in data quality rules that control the ingestion of bad data. The tool can direct the problems to the right person so that underlying data problems can be addressed.

- It supports strong governance and rule-based data handling and has strong security features.

- The data classification feature identifies personally identifiable information (PII), including taxpayer IDs, credit card numbers, phone numbers and other data. This helps eliminate duplicate records or orphan data that could end up in the wrong hands.

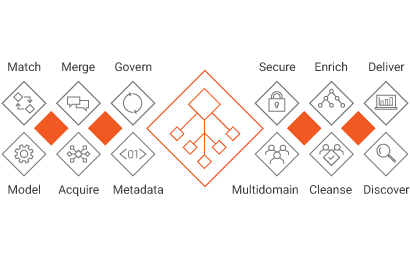

- Informatica Data Quality and Master Data Management: Its framework handles a wide range of tasks related to data quality and Master Data Management. It has role-based capabilities, artificial intelligence insights into issues, prebuilt rules and accelerators, and comprehensive data quality transformation tools.

(Image source: https://www.informatica.com/services-and-training/glossary-of-terms/master-data-management-definition.html)

- It is good at handling data standardization, validation, enrichment, consolidation and deduplication.

- The vendor provides a Master Data Management (MDM) application that addresses data integrity through matching and modelling, metadata and governance, and cleansing and enriching. It also automates data profiling, discovery, cleansing, enriching, matching and merging within a single central repository.

- It supports all types of structured and unstructured data, including applications, legacy system product data, third-party data, online data, interaction data and IoT data.

- Data Ladder: This is a leader in data cleansing, with a comprehensive set of tools that clean, match, dedupe, standardize and prepare data. It is designed to integrate, link and prepare data from nearly any source.

(Image source: https://dataladder.com/)

- Data Ladder supports integration with a wide range of databases, file formats, big data lakes, enterprise applications and social media. It has templates and connectors for managing, combining and cleansing data. Supported integrations include Microsoft Dynamics, Sage, Excel, Google Apps, Office 365, SAP, Azure Cosmos DB, Amazon Athena, Salesforce and others.

- It aims to deliver an accuracy rate of 96 percent, based on independent analysis. It uses multithreaded, in-memory processing to boost speed and accuracy.

- It has a data standardization feature that draws on more than 300,000 pre-built rules and also allows customization.

- SAS Data Management: This platform is designed to manage data integration and cleansing. It has powerful tools for data governance, metadata management, ETL and ELT, migration and synchronization capabilities, a data loader for Hadoop and a metadata bridge for handling big data.

- It offers a powerful set of wizards that help with data quality management, and it includes tools for data integration, process design, metadata management, data quality controls, ETL and ELT, data governance, migration and synchronization.

- It has strong metadata management capabilities to maintain accurate data. These include mapping, data lineage tools that validate information, wizard-driven metadata import and export, and column standardization capabilities that help with data integrity.

- Data cleansing takes place in native languages with specific language awareness and location awareness for 38 regions. This app also supports reusable data quality.

- OpenRefine: Formerly known as Google Refine, OpenRefine is a free, open source tool for managing, manipulating and cleansing data. It can accommodate hundreds or thousands of rows of data. It cleans, reformats, and transforms diverse and disparate data and is available in several languages, including English, Chinese, Spanish, French, Italian, Japanese and German.

- It cleans and transforms data from various sources including the web and social media data.

- It has powerful tools to remove formatting, filter data, rename data, add elements and accomplish numerous other tasks.

- It can reconcile and match diverse data sets, which makes it possible to obtain, adapt, cleanse and format data for web services, websites and numerous database formats.

- Syncsort Trillium: This is a leader in the data integrity space. It offers five versions of its plug-and-play application, which address different tasks within the overall objective of optimizing and integrating accurate data.

- It cleanses and optimizes data lakes with machine learning and advanced analytics; it picks out dirty and incomplete data and helps deliver actionable business decisions.

- This application can be used on premise or in the cloud, and supports more than 230 countries, regions and territories.

- It helps to find missing, duplicate and inaccurate records. It has the ability to add missing postal information as well as latitude and longitude data, and other key types of reference data.

- Talend Data Quality: Talend focuses on producing and maintaining clean and reliable data through an advanced framework that includes machine learning, pre-built connectors and components, data governance and management, and monitoring tools. It addresses data deduplication, validation and standardization.

- The application uses a graphical interface and drill-down capabilities to display details about data integrity. It allows users to evaluate data quality and measure performance against internal or external metrics and standards.

- The application enforces automatic data quality error resolution through enrichment, harmonization, fuzzy matching and deduplication.

- It offers four versions of data quality software. These include two open source versions with basic tools and features, and a more advanced subscription-based model that includes robust data mapping.

- TIBCO Clarity: It emphasizes cleansing large volumes of data to develop rich and accurate data sets. This application is available in on-premise and cloud versions. It includes tools for profiling, validating, standardizing, transforming, deduplicating, cleansing and visualizing for all major data sources and file types.

- It offers a powerful deduplication engine that supports pattern-based searches to find duplicate records and data. It allows users to deploy match strategies based on a wide range of criteria, including columns, thesaurus tables and other criteria, across multiple languages.

- It supports strong editing functions that let users manage columns, cells and tables.

- The address cleansing function works with TIBCO Geo Analytics as well as Google Maps and ArcGIS.

- Validity DemandTools: This product delivers a robust collection of tools designed to manage CRM data within Salesforce. It accommodates large data sets and identifies and deduplicates data within any database table.

- Validity focuses on providing a comprehensive suite of data integrity tools for Salesforce. DemandTools compares both internal and external data sources to deduplicate and merge records and to maintain data accuracy.

- DemandTools comes with many powerful features, including the ability to reassign ownership of data. The Find/Report module allows users to pull external data, such as an Excel spreadsheet or Access database, into the application and compare it to any data residing inside a Salesforce object.

- Validity JobBuilder is another tool that automates data cleansing and maintenance tasks by backing up data, merging duplicate data, and handling updates based on stipulated rules and conditions.
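
Several of the tools above rely on fuzzy matching to catch duplicates that differ only by a typo or a small spelling variation. The sketch below illustrates the general idea using `difflib.SequenceMatcher` from the Python standard library. It is not how any of the listed products work internally; the company names and the 0.85 threshold are assumptions chosen purely for illustration.

```python
from difflib import SequenceMatcher


def similarity(a, b):
    """Return a similarity ratio between 0 and 1 for two strings, ignoring case."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def find_likely_duplicates(names, threshold=0.85):
    """Return every pair of names whose similarity meets the threshold."""
    pairs = []
    for i in range(len(names)):
        for j in range(i + 1, len(names)):
            if similarity(names[i], names[j]) >= threshold:
                pairs.append((names[i], names[j]))
    return pairs


# Hypothetical company names, two of which differ only by a typo.
company_names = ["Acme Corporation", "Acme Corporaton", "Globex Inc", "Initech LLC"]
likely_duplicates = find_likely_duplicates(company_names)
print(likely_duplicates)
```

Comparing every pair is fine for a short list but grows quadratically with the number of records, which is one reason dedicated tools use more scalable matching strategies. The threshold is also a trade-off: a lower value catches more variations but flags more false matches.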

Data quality tools help ensure that the data used in organizations is accurate, reliable and error-free. Good data leads to better functioning of organizations, and with quality data all basic operations can be performed quickly and efficiently. Data completeness and relevance can be achieved by properly managing data quality in the organization. Outsourcing data processing to a reliable data entry service helps ensure that the entered data is accurate. These services offer high-quality data entry for a wide range of industries. Their skilled and experienced workers and advanced technology ensure that business data is managed in a timely and efficient way. They maintain the confidentiality of their clients’ data and provide customized service according to client requirements at affordable rates.